Guangjin Pan

Sidelink Positioning: Standardization Advancements, Challenges and Opportunities

Dec 31, 2025

Abstract:With the integration of cellular networks in vertical industries that demand precise location information, such as vehicle-to-everything (V2X), public safety, and Industrial Internet of Things (IIoT), positioning has become an imperative component for future wireless networks. By exploiting a wider spectrum, multiple antennas and flexible architectures, cellular positioning achieves ever-increasing positioning accuracy. Still, it faces fundamental performance degradation when the distance between user equipment (UE) and the base station (BS) is large or in non-line-of-sight (NLoS) scenarios. To this end, the 3rd generation partnership project (3GPP) Rel-18 proposes to standardize sidelink (SL) positioning, which provides unique opportunities to extend the positioning coverage via direct positioning signaling between UEs. Despite the standardization advancements, the capability of SL positioning is controversial, especially how much spectrum is required to achieve the positioning accuracy defined in 3GPP. To this end, this article summarizes the latest standardization advancements of 3GPP on SL positioning comprehensively, covering a) network architecture; b) positioning types; and c) performance requirements. The capability of SL positioning using various positioning methods under different imperfect factors is evaluated and discussed in-depth. Finally, according to the evolution of SL in 3GPP Rel-19, we discuss the possible research directions and challenges of SL positioning.
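The spectrum question raised in this abstract can be made concrete with a first-order rule of thumb: a signal of bandwidth B resolves delays of roughly 1/B, so one-way range resolution is about c/B. The sketch below is only that rule, not the article's evaluation. The function name and the example bandwidths are assumptions chosen for illustration, and achievable accuracy can be finer than this resolution at high SNR.

```python
# Rule-of-thumb one-way range resolution for a given signal bandwidth.
# Assumption: delay resolution ~ 1/B, hence range resolution ~ c/B.
# This is a coarse guide, not the evaluation method of the article.

SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_resolution_m(bandwidth_hz: float) -> float:
    """Return the approximate one-way range resolution in metres."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return SPEED_OF_LIGHT_M_S / bandwidth_hz


# Hypothetical example bandwidths, chosen only for illustration.
for bandwidth_mhz in (20, 100, 400):
    resolution = range_resolution_m(bandwidth_mhz * 1e6)
    print(f"{bandwidth_mhz:>4} MHz -> about {resolution:.2f} m")
```

Under this rule, halving the resolution requires doubling the bandwidth, which is why the amount of spectrum available to SL is central to the accuracy debate.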

Semantic Communication for Rate-Limited Closed-Loop Distributed Communication-Sensing-Control Systems

Dec 22, 2025

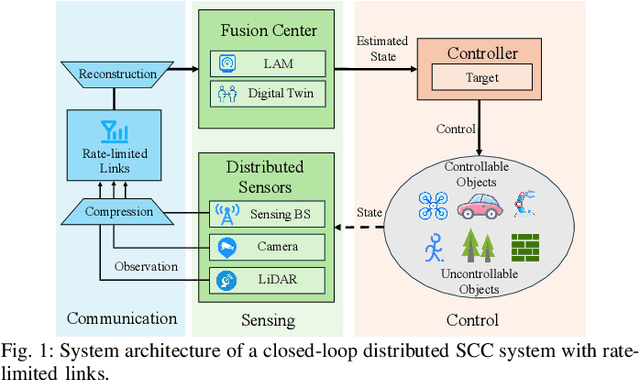

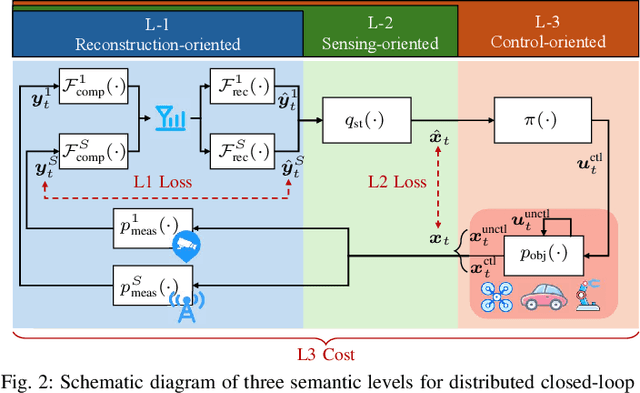

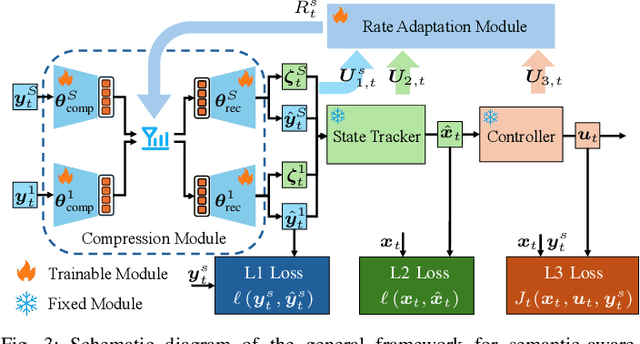

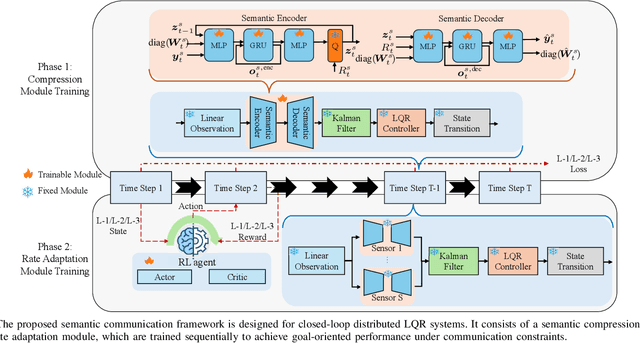

Abstract:The growing integration of distributed integrated sensing and communication (ISAC) with closed-loop control in intelligent networks demands efficient information transmission under stringent bandwidth constraints. To address this challenge, this paper proposes a unified framework for goal-oriented semantic communication in distributed sensing, communication, and control (SCC) systems. Building upon Weaver's three-level model, we establish a hierarchical semantic formulation with three error levels (L1: observation reconstruction, L2: state estimation, and L3: control) to jointly optimize their corresponding objectives. Based on this formulation, we propose a unified goal-oriented semantic compression and rate adaptation framework that is applicable to different semantic error levels and optimization goals across the SCC loop. A rate-limited multi-sensor linear quadratic regulator (LQR) system is used as a case study to validate the proposed framework. We employ a gated recurrent unit (GRU)-based autoencoder (AE) for semantic compression and a proximal policy optimization (PPO)-based rate adaptation algorithm that dynamically allocates transmission rates across sensors. Results show that the proposed framework effectively captures task-relevant semantics and adapts its resource allocation strategies across different semantic levels, thereby achieving level-specific performance gains under bandwidth constraints.

UNILocPro: Unified Localization Integrating Model-Based Geometry and Channel Charting

Oct 31, 2025

Abstract:In this paper, we propose a unified localization framework (called UNILocPro) that integrates model-based localization and channel charting (CC) for mixed line-of-sight (LoS)/non-line-of-sight (NLoS) scenarios. Specifically, based on LoS/NLoS identification, an adaptive activation between the model-based and CC-based methods is conducted. Aiming for unsupervised learning, information obtained from the model-based method is utilized to train the CC model, where a pairwise distance loss (involving a new dissimilarity metric design), a triplet loss (if timestamps are available), a LoS-based loss, and an optimal transport (OT)-based loss are jointly employed such that the global geometry can be well preserved. To reduce the training complexity of UNILocPro, we propose a low-complexity implementation (called UNILoc), where the CC model is trained with self-generated labels produced by a single pre-training OT transformation, which avoids iterative Sinkhorn updates involved in the OT-based loss computation. Extensive numerical experiments demonstrate that the proposed unified frameworks achieve significantly improved positioning accuracy compared to both model-based and CC-based methods. Notably, UNILocPro with timestamps attains performance on par with fully-supervised fingerprinting despite operating without labelled training data. It is also shown that the low-complexity UNILoc can substantially reduce training complexity with only marginal performance degradation.
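For readers unfamiliar with the iterative Sinkhorn updates mentioned in this abstract, the following is a minimal generic sketch of entropy-regularised optimal transport between two point sets. It is not the authors' implementation. The point sets, the regularisation strength `eps`, the iteration count, and all variable names are assumptions made for illustration.

```python
import numpy as np


def sinkhorn(cost, a, b, eps=0.5, n_iters=200):
    """Entropy-regularised OT plan between histograms a and b."""
    kernel = np.exp(-cost / eps)          # Gibbs kernel
    u = np.ones_like(a)
    for _ in range(n_iters):
        v = b / (kernel.T @ u)            # rescale to match column marginals
        u = a / (kernel @ v)              # rescale to match row marginals
    return u[:, None] * kernel * v[None, :]


rng = np.random.default_rng(0)
# Hypothetical data: coarse position estimates and candidate map locations.
estimates = rng.uniform(0.0, 10.0, size=(5, 2))
candidates = rng.uniform(0.0, 10.0, size=(8, 2))

# Squared Euclidean cost, normalised to [0, 1] for numerical stability.
cost = ((estimates[:, None, :] - candidates[None, :, :]) ** 2).sum(axis=-1)
cost = cost / cost.max()

a = np.full(5, 1.0 / 5)                   # uniform mass on estimates
b = np.full(8, 1.0 / 8)                   # uniform mass on candidates
plan = sinkhorn(cost, a, b)
print(plan.round(3))
```

Each loop iteration costs two matrix-vector products, and an OT-based loss has to repeat the loop at every training step. That repeated cost is what a single pre-training OT transformation avoids.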

Active Inference Framework for Closed-Loop Sensing, Communication, and Control in UAV Systems

Sep 17, 2025

Abstract:Integrated sensing and communication (ISAC) is a core technology for 6G, and its application to closed-loop sensing, communication, and control (SCC) enables various services. Existing SCC solutions often treat sensing and control separately, leading to suboptimal performance and resource usage. In this work, we introduce the active inference framework (AIF) into SCC-enabled unmanned aerial vehicle (UAV) systems for joint state estimation, control, and sensing resource allocation. By formulating a unified generative model, the problem reduces to minimizing variational free energy for inference and expected free energy for action planning. Simulation results show that both control cost and sensing cost are reduced relative to baselines.

Large Wireless Localization Model (LWLM): A Foundation Model for Positioning in 6G Networks

May 15, 2025

Abstract:Accurate and robust localization is a critical enabler for emerging 5G and 6G applications, including autonomous driving, extended reality (XR), and smart manufacturing. While data-driven approaches have shown promise, most existing models require large amounts of labeled data and struggle to generalize across deployment scenarios and wireless configurations. To address these limitations, we propose a foundation-model-based solution tailored for wireless localization. We first analyze how different self-supervised learning (SSL) tasks acquire general-purpose and task-specific semantic features based on information bottleneck (IB) theory. Building on this foundation, we design a pretraining methodology for the proposed Large Wireless Localization Model (LWLM). Specifically, we propose an SSL framework that jointly optimizes three complementary objectives: (i) spatial-frequency masked channel modeling (SF-MCM), (ii) domain-transformation invariance (DTI), and (iii) position-invariant contrastive learning (PICL). These objectives jointly capture the underlying semantics of the wireless channel from multiple perspectives. We further design lightweight decoders for key downstream tasks, including time-of-arrival (ToA) estimation, angle-of-arrival (AoA) estimation, single base station (BS) localization, and multiple BS localization. Comprehensive experimental results confirm that LWLM consistently surpasses both model-based and supervised learning baselines across all localization tasks. In particular, LWLM achieves 26.0% to 87.5% improvement over transformer models without pretraining, and exhibits strong generalization under label-limited fine-tuning and unseen BS configurations, confirming its potential as a foundation model for wireless localization.

Rate-Limited Closed-Loop Distributed ISAC Systems: An Autoencoder Approach

May 03, 2025

Abstract:In closed-loop distributed multi-sensor integrated sensing and communication (ISAC) systems, performance often hinges on transmitting high-dimensional sensor observations over rate-limited networks. In this paper, we first present a general framework for rate-limited closed-loop distributed ISAC systems, and then propose an autoencoder-based observation compression method to overcome the constraints imposed by limited transmission capacity. Building on this framework, we conduct a case study using a closed-loop linear quadratic regulator (LQR) system to analyze how the interplay among observation, compression, and state dimensions affects reconstruction accuracy, state estimation error, and control performance. In multi-sensor scenarios, our results further show that optimal resource allocation initially prioritizes low-noise sensors until the compression becomes lossless, after which resources are reallocated to high-noise sensors.
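As background for the LQR case study mentioned in this abstract, here is a minimal sketch of how a discrete-time LQR feedback gain can be computed by iterating the Riccati recursion. It covers only the idealised full-state controller, with no observation compression or rate limit. The double-integrator plant, the weights `Q` and `R`, and the function name are assumptions for illustration and are not taken from the paper.

```python
import numpy as np


def dlqr_gain(A, B, Q, R, n_iters=500):
    """Iterate the discrete-time Riccati recursion; return gain K and cost P."""
    P = Q.copy()
    for _ in range(n_iters):
        # K = (R + B' P B)^{-1} B' P A
        K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
        # P = Q + A' P (A - B K), equivalent to the standard Riccati update
        P = Q + A.T @ P @ (A - B @ K)
    return K, P


# Hypothetical plant: double integrator sampled at 0.1 s.
A = np.array([[1.0, 0.1],
              [0.0, 1.0]])
B = np.array([[0.005],
              [0.1]])
Q = np.eye(2)              # state cost weight (assumed)
R = np.array([[0.1]])      # control cost weight (assumed)

K, P = dlqr_gain(A, B, Q, R)
spectral_radius = max(abs(np.linalg.eigvals(A - B @ K)))
print("gain:", K)
print("closed-loop spectral radius:", spectral_radius)
```

In a rate-limited setting, the controller would apply such a gain to a state estimate formed from compressed observations, so compression error feeds into both estimation error and control cost.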

UNILoc: Unified Localization Combining Model-Based Geometry and Unsupervised Learning

Apr 24, 2025

Abstract:Accurate mobile device localization is critical for emerging 5G/6G applications such as autonomous vehicles and augmented reality. In this paper, we propose a unified localization method that integrates model-based and machine learning (ML)-based methods to reap their respective advantages by exploiting available map information. In order to avoid supervised learning, we generate training labels automatically via optimal transport (OT) by fusing geometric estimates with building layouts. Ray-tracing based simulations are carried out to demonstrate that the proposed method significantly improves positioning accuracy for both line-of-sight (LoS) users (compared to ML-based methods) and non-line-of-sight (NLoS) users (compared to model-based methods). Remarkably, the unified method is able to achieve competitive overall performance with the fully-supervised fingerprinting, while eliminating the need for cumbersome labeled data measurement and collection.

AI-driven Wireless Positioning: Fundamentals, Standards, State-of-the-art, and Challenges

Jan 24, 2025

Abstract:Wireless positioning technologies hold significant value for applications in autonomous driving, extended reality (XR), unmanned aerial vehicles (UAVs), and more. With the advancement of artificial intelligence (AI), leveraging AI to enhance positioning accuracy and robustness has emerged as a field full of potential. Driven by the requirements and functionalities defined in the 3rd Generation Partnership Project (3GPP) standards, AI/machine learning (ML)-based positioning is becoming a key technology to overcome the limitations of traditional methods. This paper begins with an introduction to the fundamentals of AI and wireless positioning, covering AI models, algorithms, positioning applications, emerging wireless technologies, and the basics of positioning techniques. Subsequently, focusing on standardization progress, we provide a comprehensive review of the evolution of 3GPP positioning standards, with an emphasis on the integration of AI/ML technologies in recent and upcoming releases. Based on the AI/ML-assisted positioning and direct AI/ML positioning schemes outlined in the standards, we conduct an in-depth investigation of related research. For AI/ML-assisted positioning, we focus on state-of-the-art (SOTA) research in AI-based line-of-sight (LOS)/non-line-of-sight (NLOS) detection, time of arrival (TOA)/time difference of arrival (TDOA) estimation, and angle estimation techniques. For direct AI/ML positioning, we explore SOTA advancements in fingerprint-based positioning, knowledge-assisted AI positioning, and channel charting-based positioning. Furthermore, we introduce publicly available datasets for wireless positioning and conclude by summarizing the challenges and opportunities of AI-driven wireless positioning.

Energy Optimization of Multi-task DNN Inference in MEC-assisted XR Devices: A Lyapunov-Guided Reinforcement Learning Approach

Jan 05, 2025

Abstract:Extended reality (XR), blending virtual and real worlds, is a key application of future networks. While AI advancements enhance XR capabilities, they also impose significant computational and energy challenges on lightweight XR devices. In this paper, we develop a distributed queue model for multi-task DNN inference, addressing issues of resource competition and queue coupling. In response to the challenges posed by the high energy consumption and limited resources of XR devices, we design a dual time-scale joint optimization strategy for model partitioning and resource allocation, formulated as a bi-level optimization problem. This strategy aims to minimize the total energy consumption of XR devices while ensuring queue stability and adhering to computational and communication resource constraints. To tackle this problem, we devise a Lyapunov-guided Proximal Policy Optimization algorithm, named LyaPPO. Numerical results demonstrate that the LyaPPO algorithm outperforms the baselines, achieving energy conservation of 24.79% to 46.14% under varying resource capacities. Specifically, the proposed algorithm reduces the energy consumption of XR devices by 24.29% to 56.62% compared to baseline algorithms.

Quality of Experience Oriented Cross-layer Optimization for Real-time XR Video Transmission

Apr 15, 2024

Abstract:Extended reality (XR) is one of the most important applications of beyond 5G and 6G networks. Real-time XR video transmission presents challenges in terms of data rate and delay. In particular, the frame-by-frame transmission mode of XR video makes real-time XR video very sensitive to dynamic network environments. To improve the users' quality of experience (QoE), we design a cross-layer transmission framework for real-time XR video. The proposed framework allows the simple information exchange between the base station (BS) and the XR server, which assists in adaptive bitrate and wireless resource scheduling. We utilize the cross-layer information to formulate the problem of maximizing user QoE by finding the optimal scheduling and bitrate adjustment strategies. To address the issue of mismatched time scales between two strategies, we decouple the original problem and solve them individually using a multi-agent-based approach. Specifically, we propose the multi-step Deep Q-network (MS-DQN) algorithm to obtain a frame-priority-based wireless resource scheduling strategy and then propose the Transformer-based Proximal Policy Optimization (TPPO) algorithm for video bitrate adaptation. The experimental results show that the TPPO+MS-DQN algorithm proposed in this study can improve the QoE by 3.6% to 37.8%. More specifically, the proposed MS-DQN algorithm enhances the transmission quality by 49.9%-80.2%.
